In late 2025, nothing is more fascinating than watching AI generate videos, pictures, text, and numbers like never before. Students are fascinated by how easy it now is to finish school assignments, whether essays or maths questions. Business people are fascinated by how fast they can write marketing copy, generate a poster for social media, or brainstorm a project idea for a campaign. Founders (like me, the smallest of all, and I mean it) are impressed by how easy it is to do market research before launching a product, and by how ChatGPT, Grok, or Gemini can provide a quick skeleton of a potential business plan. Academics are shocked to obtain analytical insights in less than 40 minutes, something that would have taken them days, if not weeks, of hard work, research, and analysis. Everybody seems captivated by how massive an impact AI is having today. That is on the one hand.

And on the other hand, the companies behind the AI models that power tools like ChatGPT are challenged by the public's expectation of an Artificial General Intelligence that should solve all their academic, professional, social, and even medical pains in a single prompt ("Between AGI and ASI", from AI, by Kenn Kibadi).

Between user expectations and the companies building the AI, there is an almost unsolvable tension.

AI companies do their best with benchmarks.

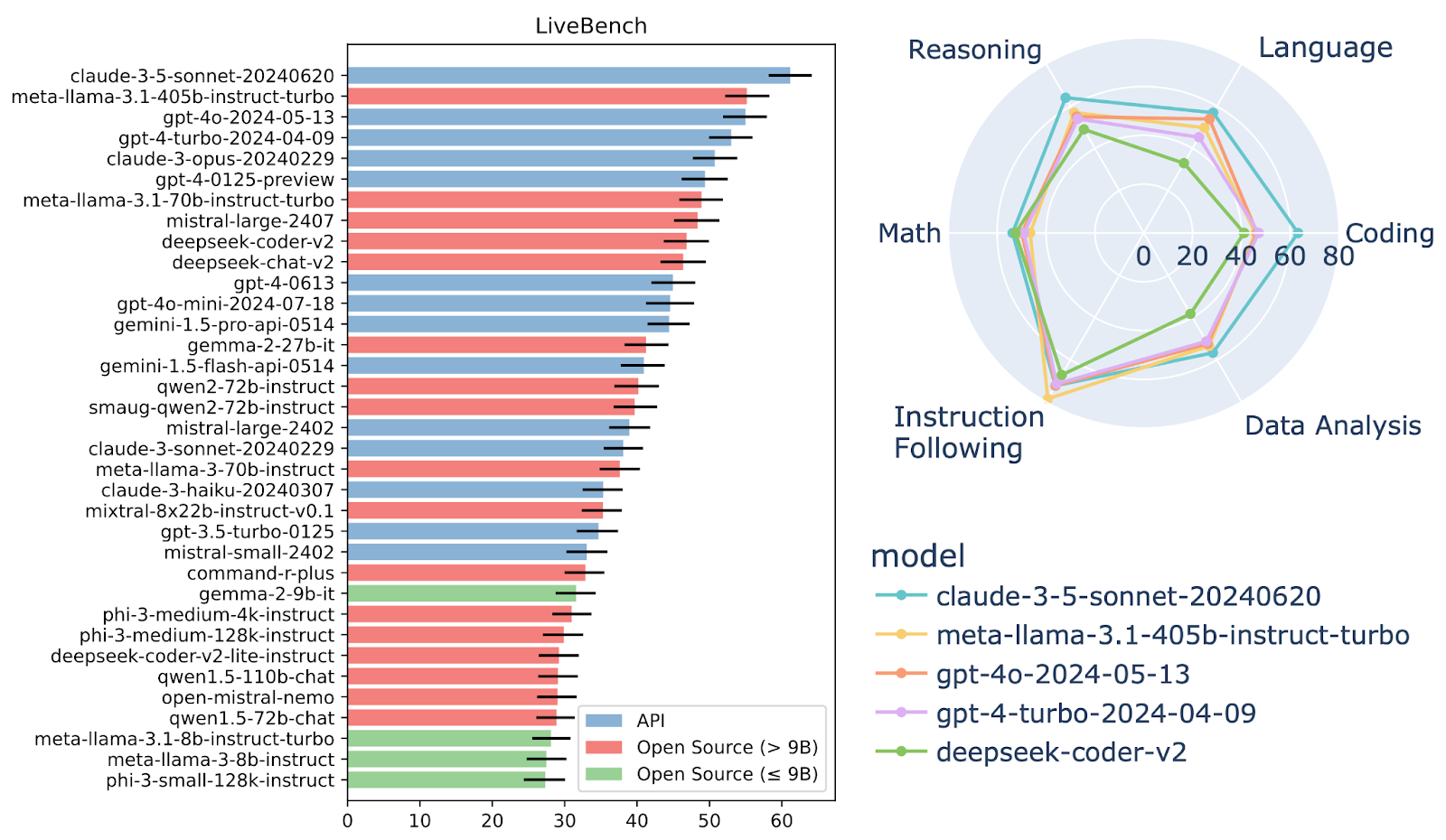

In AI engineering, we say a model is great at something when it passes tests designed to compare models on specific tasks (Q&A, reasoning, code generation, image recognition, sound processing, maths problem solving, etc.), using particular datasets and metrics, and those tests show how "superior" the model is to the others. Those tests are what we call "benchmarks".

In other words, AI companies have to work hard to show how valuable their AI solution or model is, both to technical customers (those who build end-user applications on top of the model) and to non-technical customers (those who use the AI-powered applications). Their end goal becomes demonstrating that their models deliver an outcome that meets the public's expectations and, ideally, its needs; the latter is not always the case.

AI Benchmarks != Business value

There is a gap between building an AI model that beats all the other models on benchmarks and delivering business value with it. Training a model on a specific set of data helps produce a powerful model, but that model can still be ineffective in real-world scenarios, for multiple reasons (I am naming two of them):

1. Benchmarks typically test simplified, static tasks

Most benchmarks are curated academic datasets with limited variability or context. They rarely simulate enterprise complexity: noisy data, diverse user intents, business rules, legal constraints, etc. This is what makes it difficult to deliver business value sometimes. [1]

An AI model scoring 90% on a benchmark can still fail in production because it wasn’t tested under real conditions.

Satya Nadella, CEO of Microsoft, said:

AI’s success must be measured in economic impact, not benchmarks

If you're running a business and want to integrate AI into your workflow, the source of truth is not always the benchmarks. It lies rather in running multiple tests, with a human in the loop for better supervision, and in the practical insights you gain from how the AI model adapts to your specific business context. Jumping straight onto the shiny new AI model can be deceptive for companies that seek to use AI for profitability. Why? Because AI benchmarks can't predict the outcomes. [2]
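As a rough illustration of that kind of in-context testing, here is a minimal Python sketch. It assumes the model is simply a function from a prompt to an answer; `run_trials`, `make_stub_model`, the prompts, and the agreement threshold are all hypothetical names and values made up for this example, not part of any real tool. It asks each business prompt several times and sends any prompt whose answers disagree to a human reviewer.

```python
import itertools
from collections import Counter
from typing import Callable, Iterable


def run_trials(model: Callable[[str], str], prompts: Iterable[str],
               runs: int = 3, min_agreement: float = 1.0):
    """Ask each prompt `runs` times; queue unstable answers for a human."""
    stable, needs_review = [], []
    for prompt in prompts:
        answers = [model(prompt) for _ in range(runs)]
        top_answer, top_count = Counter(answers).most_common(1)[0]
        record = {"prompt": prompt, "answer": top_answer,
                  "agreement": top_count / runs}
        if record["agreement"] >= min_agreement:
            stable.append(record)
        else:
            needs_review.append(record)  # a person checks these by hand
    return stable, needs_review


def make_stub_model() -> Callable[[str], str]:
    """Hypothetical stand-in for a real model call (assumption, not an API)."""
    flaky = itertools.cycle(["10%", "15%", "10%"])

    def model(prompt: str) -> str:
        if "refund" in prompt:
            return "Refunds are accepted within 30 days."
        return next(flaky)  # simulates an answer that changes between runs

    return model


stable, needs_review = run_trials(
    make_stub_model(),
    ["What is our refund policy?", "What discount applies to tier B?"],
)
print("stable:", [r["prompt"] for r in stable])
print("for human review:", [r["prompt"] for r in needs_review])
```

Agreement across runs only says an answer is stable, not that it is right, so in practice the reviewer would also spot-check the stable answers against the company's own rules.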

2. Benchmark scores don’t predict business outcomes

Benchmarks assess technical performance, not business impact, such as:

Revenue uplift

Customer satisfaction

Cost savings

Operational risk reduction

Profitability

Looking at a model's technical metrics simply doesn’t capture these outcomes. Keeping our eyes fixed on an impressive benchmark just isn't enough.

So a top benchmark score doesn’t guarantee ROI (return on investment), adoption, or value creation. [3][4]
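To make the gap concrete, here is a small worked example in Python. Every figure in it is invented for illustration, and `PilotResult`, `net_value`, and `roi` are hypothetical names: the sketch assumes a company has piloted two models on its own requests and recorded what each correct and each wrong answer was worth.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    name: str
    benchmark_score: float       # published benchmark accuracy, 0..1
    requests: int                # requests handled during the pilot
    resolved: int                # requests resolved without human rework
    value_per_resolution: float  # money saved per resolved request
    cost_per_error: float        # rework or refund cost per failed request
    running_cost: float          # inference and integration cost


def net_value(p: PilotResult) -> float:
    """Money gained from the pilot after errors and running costs."""
    errors = p.requests - p.resolved
    return (p.resolved * p.value_per_resolution
            - errors * p.cost_per_error
            - p.running_cost)


def roi(p: PilotResult) -> float:
    """Return on investment: net value divided by what was spent."""
    return net_value(p) / p.running_cost


# All figures below are invented for illustration only.
model_a = PilotResult("model-a", 0.90, 1000, 700, 4.0, 10.0, 1500.0)
model_b = PilotResult("model-b", 0.82, 1000, 880, 4.0, 10.0, 1800.0)

best_on_benchmark = max([model_a, model_b], key=lambda p: p.benchmark_score)
best_for_business = max([model_a, model_b], key=net_value)

for p in (model_a, model_b):
    print(f"{p.name}: benchmark={p.benchmark_score:.0%}, "
          f"net value={net_value(p):.0f}, ROI={roi(p):.0%}")
print("benchmark pick:", best_on_benchmark.name)
print("business pick:", best_for_business.name)
```

With these made-up numbers, the model with the higher benchmark score loses money in the pilot, while the lower-scoring one comes out ahead.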

I am pretty sure there are many other reasons to prove the point. See reference [5] for more industry-specific reasons.

Maybe the future of AI is... vertical.

The idea behind a vertically trained AI model is to make it so focused (yet without overfitting) on the training data of a given domain or industry that, in a business context with real-world data from the same industry, the business outcome matches the value we are aiming for. This might be where we are heading, because business requires a more specific context: every business is different in its own way and needs focused attention when it comes to applying AI.

Indeed, what we’re seeing in the industry is a shift from Horizontal intelligence (general-purpose models optimized for benchmarks) [6] to Vertical intelligence (domain-specific systems optimized for outcomes) [7].

Business Value(AI) = f(Foundation Model, Domain Context, Task Alignment, Real-World Evaluation)

Business value from AI isn’t about raw intelligence or benchmark scores. It comes from:

Foundation Model: the core AI capabilities (language, reasoning, understanding).

Domain Context: adapting the AI to your industry’s language, rules, and data.

Task Alignment: aligning the AI to real workflows and business processes.

Real-World Evaluation: measuring performance with actual business outcomes, not abstract tests.

In other words, AI creates value when it’s smart, focused on your business, and proven in real-world conditions.

References

[1] Eriksson, M., Purificato, E., Noroozian, A., Vinagre, J., Chaslot, G., Gómez, E., & Fernández-Llorca, D. (2025). Can we trust AI benchmarks? An interdisciplinary review of current issues in AI evaluation. arXiv. https://arxiv.org/abs/2502.06559

[2] Reuel, A., Hardy, A., Smith, C., Lamparth, M., Hardy, M., & Kochenderfer, M. J. (2024). BetterBench: Assessing AI benchmarks, uncovering issues, and establishing best practices. arXiv. https://arxiv.org/abs/2411.12990

[3] Xite.dev. (2025, May 21). Why traditional AI benchmarks fall short in measuring real-world business impact. https://xite.ai/blogs/why-traditional-ai-benchmarks-fall-short-in-measuring-real-world-business-impact/

[4] Nowak, D. (2025). Why your AI benchmarks are lying to you. https://davidnowak.me/why-your-ai-benchmarks-are-lying-to-you/

[5] LayerLens.ai. (2025). The hidden flaws of AI benchmarks: Why the industry needs a reality check. https://layerlens.ai/reports/the-hidden-flaws-of-ai-benchmarks-why-the-industry-needs-a-reality-check

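[6] Bommasani, R., et al. (2021). On the opportunities and risks of foundation models. arXiv. https://arxiv.org/abs/2108.07258 (The authors explicitly state that foundation models require adaptation to domains to create downstream value.)

[7] Gururangan, S., et al. (2020). Don’t stop pretraining: Adapt language models to domains and tasks. arXiv. https://arxiv.org/abs/2004.10964 (The authors show that domain-adaptive pretraining significantly improves downstream task performance.)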
